Treating Deepfake Clone Detection as a speaker verification problem

Gautam Tata

The rapid advances in deep learning have led to the creation of highly realistic synthetic audio, including deepfake speech that can fool both humans and Automatic Speaker Verification (ASV) systems. This presents a significant security risk, as deepfake audio can be used for malicious activities such as impersonation or synchronizing speech in deepfake videos. Traditional methods for detecting synthetic speech typically struggle to generalize when faced with new, unseen synthetic audio generation techniques. In response, the authors of this paper propose a novel detection approach based on speaker verification techniques, which leverages the biometric characteristics of speakers, ensuring better generalization and robustness to audio impairments.

Key Concepts

Synthetic Audio and Speaker Verification

Synthetic speech is generated through methods like Text-to-Speech (TTS) synthesis or Voice Conversion (VC). These methods have evolved to produce highly realistic speech, often indistinguishable from human speech. Traditional ASV systems, designed to verify whether a given speech sample belongs to a claimed speaker, often fall short when dealing with deepfake audio.

Speaker verification is an essential tool in biometric security systems. It compares a speech sample against reference samples from the same speaker to authenticate identity. The proposed method in this paper adapts state-of-the-art speaker verification techniques to detect deepfake audio, under the assumption that deepfake audio will deviate from the biometric characteristics found in real speech.

The Proposed Detection Approach

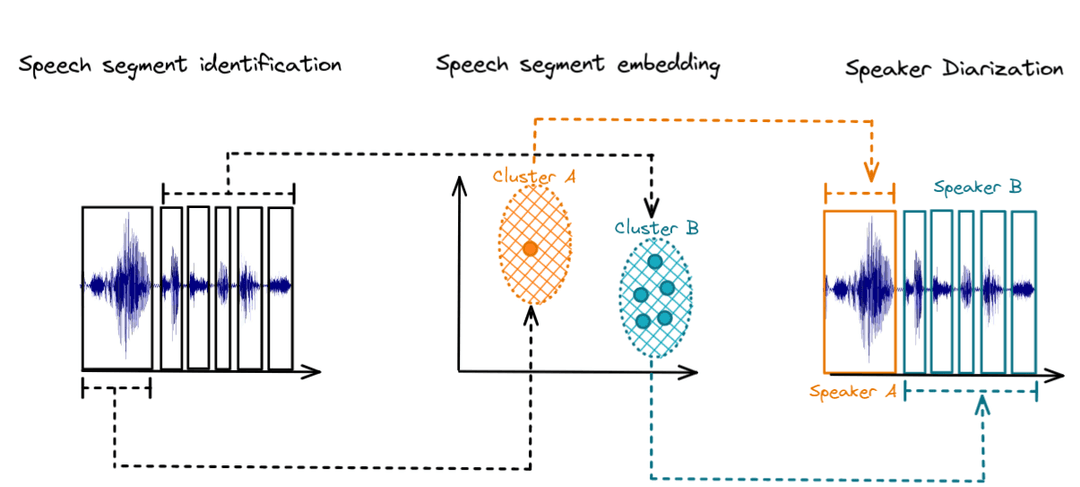

The authors present a Person-of-Interest (PoI) approach to detect synthetic audio. The key idea is to verify the identity of the speaker in the audio by comparing it to a reference set of real audios from the same person. If the speaker cannot be verified, the audio is labeled as synthetic (or deepfake). The approach is unique in that it doesn’t rely on any specific deepfake generation techniques, meaning it can generalize well to unseen attacks.

Two Testing Strategies

Centroid-Based Testing (CB): In this approach, all reference audio embeddings are averaged to create a centroid. The test audio embedding is then compared to this centroid using a similarity metric (such as cosine similarity). If the similarity is below a threshold, the audio is classified as fake. This strategy is computationally efficient since it reduces the problem to a single similarity comparison.

Maximum-Similarity Testing (MS): In this strategy, each reference audio embedding is compared individually to the test audio embedding. The maximum similarity value is used as the decision statistic. This method can be more effective when the reference set contains a variety of speech samples, as it allows for finer distinctions between real and fake speech.

Speaker Verification Techniques Used

The authors evaluated several off-the-shelf speaker verification techniques, each trained on real speech data, making them well-suited to detecting fake speech through their ability to generalize across different audio domains. Key techniques include:

- Clova-AI: Uses a ResNet-34-based architecture with self-attentive pooling and angular prototypical loss for speaker embedding. It is designed for metric learning in speaker verification.

- H/ASP: A modified version of ResNet-34 with attentive statistics pooling and a combination of softmax and angular prototypical loss.

- ECAPA-TDNN: A time-delay neural network with channel attention and angular margin softmax loss, which explicitly models the temporal and frequency structure of speech.

- POI-Forensics: Initially designed for deepfake video detection, this method uses ResNet-50 for speaker embedding and contrastive learning for speaker identification.

Experimental Results

The experiments were conducted on three publicly available datasets:

ASVSpoof2019: A large dataset used in speaker verification and anti-spoofing challenges, containing both real and synthesized speech from various methods. FakeAVCelebV2: A dataset containing real and fake audio extracted from VoxCeleb videos. In-The-Wild Audio Deepfake Dataset (IWA): Contains real and fake audio recordings collected from public sources, offering a more challenging and realistic test scenario.

Performance Metrics

The authors evaluated performance using metrics like Equal Error Rate (EER), Area Under the ROC Curve (AUC), and the Tandem Detection Cost Function (t-DCF). Results showed that the proposed speaker verification-based methods outperform traditional supervised detection approaches, particularly in terms of generalization. The Maximum-Similarity strategy was especially robust in challenging conditions, such as those in the IWA dataset, where background noise was present.

Robustness and Generalization

A critical advantage of this approach is its ability to generalize to unseen synthetic audio. Since the methods are trained only on real speech data, they are not tied to specific synthetic audio generation techniques. This allows them to detect deepfake audio generated by new, unknown methods—an area where traditional supervised methods often fail.

In terms of robustness, the authors tested the methods under various noise conditions, using real-world environmental sounds like footsteps, typing, or wind noise. The Maximum-Similarity strategy proved more resilient to noise, especially when the speaker verification methods had been trained with data augmentation techniques.

Conclusion

The paper presents a novel approach to detecting synthetic audio by leveraging speaker verification techniques. By focusing solely on the biometric characteristics of speakers, the method avoids reliance on specific deepfake generation methods, ensuring generalization to unseen attacks. The results demonstrate that the proposed approach is effective and robust, outperforming traditional supervised methods in real-world scenarios. This framework opens up new possibilities for using speaker verification tools to combat the growing threat of deepfake audio.